Years after the advent of decent VR hardware, there are still precious few ways the technology has been employed as anything other than a game or gimmick. One team at NASA, however, has been assembling useful science and engineering applications, with promising and unique results.

Studying the astronomical number of stars in our galaxy is generally done using legacy tools, scattered databases, perhaps even paper and pencil. As a result, it can be hard to bring that great multi-purpose pattern recognition engine, the human brain, fully to bear on the information.

Tom Grubb, an engineer at NASA’s Goddard Space Flight Center, has felt for years that VR and AR are valuable tools for exploring and working with this type of data, and his team has just presented its first paper directly resulting from using those technologies.

He and his colleagues used a VR environment to examine an animated stellar neighborhood and arrived at a novel classification for a star group whose membership other astronomers had disagreed on. Being able to intuitively observe the stars' paths and positions in three-dimensional space provided the key insight.

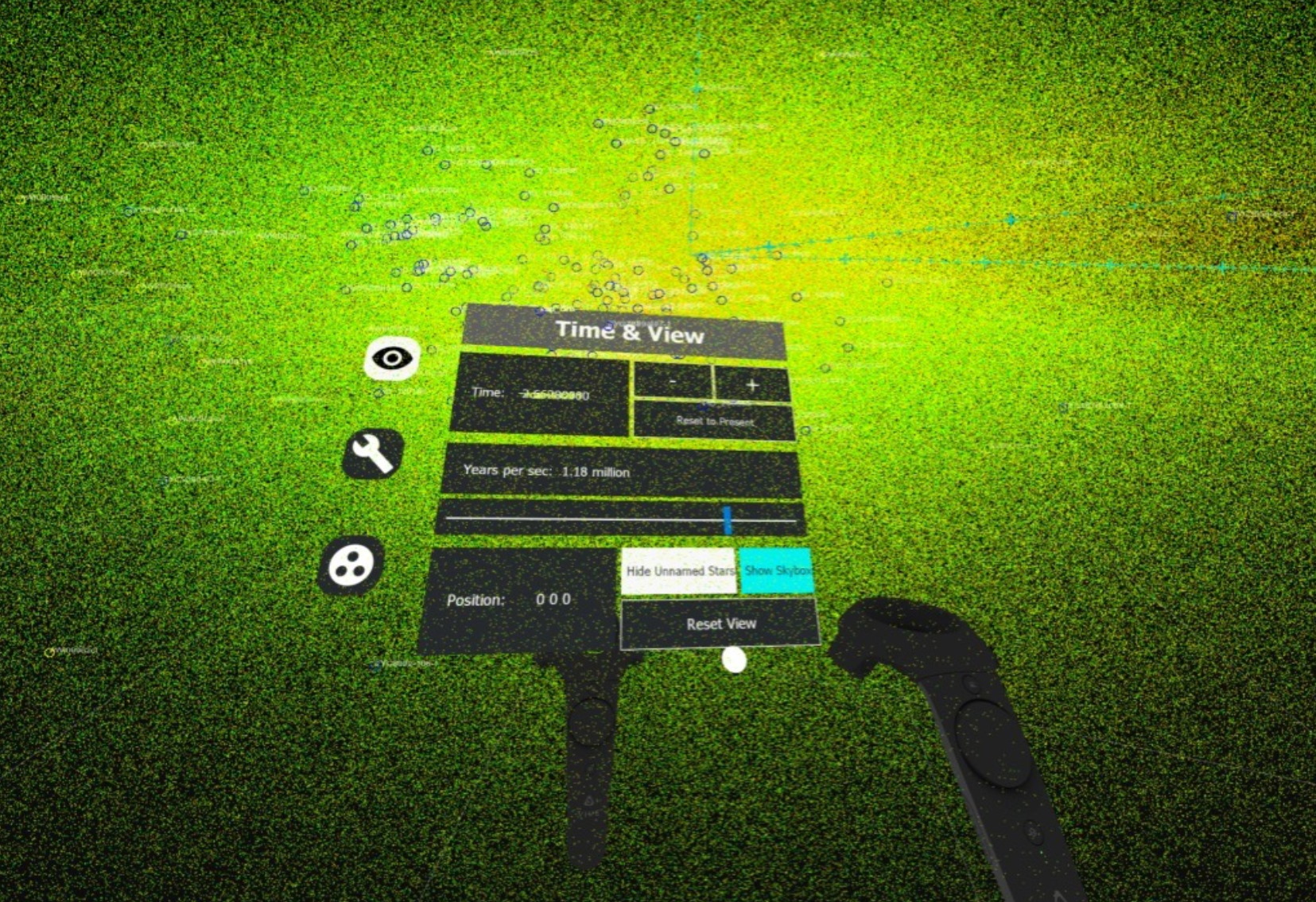

An example of star data displayed in PointCloudsVR

“Planetariums are uploading all the databases they can get their hands on and they take people through the cosmos,” said astronomer Marc Kuchner in a NASA news post. “Well, I’m not going to build a planetarium in my office, but I can put on a headset and I’m there.”

Grubb and the team have created a number of software projects to bring not just astronomical databases but also engineering work into VR. Just as heavy industry is learning to incorporate VR and AR into its safety, maintenance and training routines, NASA is exploring the technologies for engineering and cross-site collaboration.

Part of that is just establishing basic tools for viewing and manipulating the data.

“The hardware is here; the support is here. The software is lagging, as well as conventions on how to interact with the virtual world,” Grubb explained. “You don’t have simple conventions like pinch and zoom or how every mouse works the same when you right click or left click.”

But once you have people in a virtual environment looking at a 3D star map or representation of a probe’s interior, there are new opportunities to be discovered.

“We’ll be in the same environment and when we point at or manipulate something in the environment, they’ll be able to see that,” Grubb said. “You still have to build mockups, but you can work out a lot of the iterations before you move to the physical model. It’s not really sexy to the average person to talk about cable routing, but to an engineer, being able to do that in a virtual environment and know how much cabling you need and what the route looks like, that’s very exciting.”

The work is ongoing, and the paper describing the team's first astronomy results should be published soon. Much of what the team builds is also released publicly; for example, PointCloudsVR, the tool they use to review star and lidar data, is available to download on GitHub.

from TechCrunch https://ift.tt/2RZMOMv
